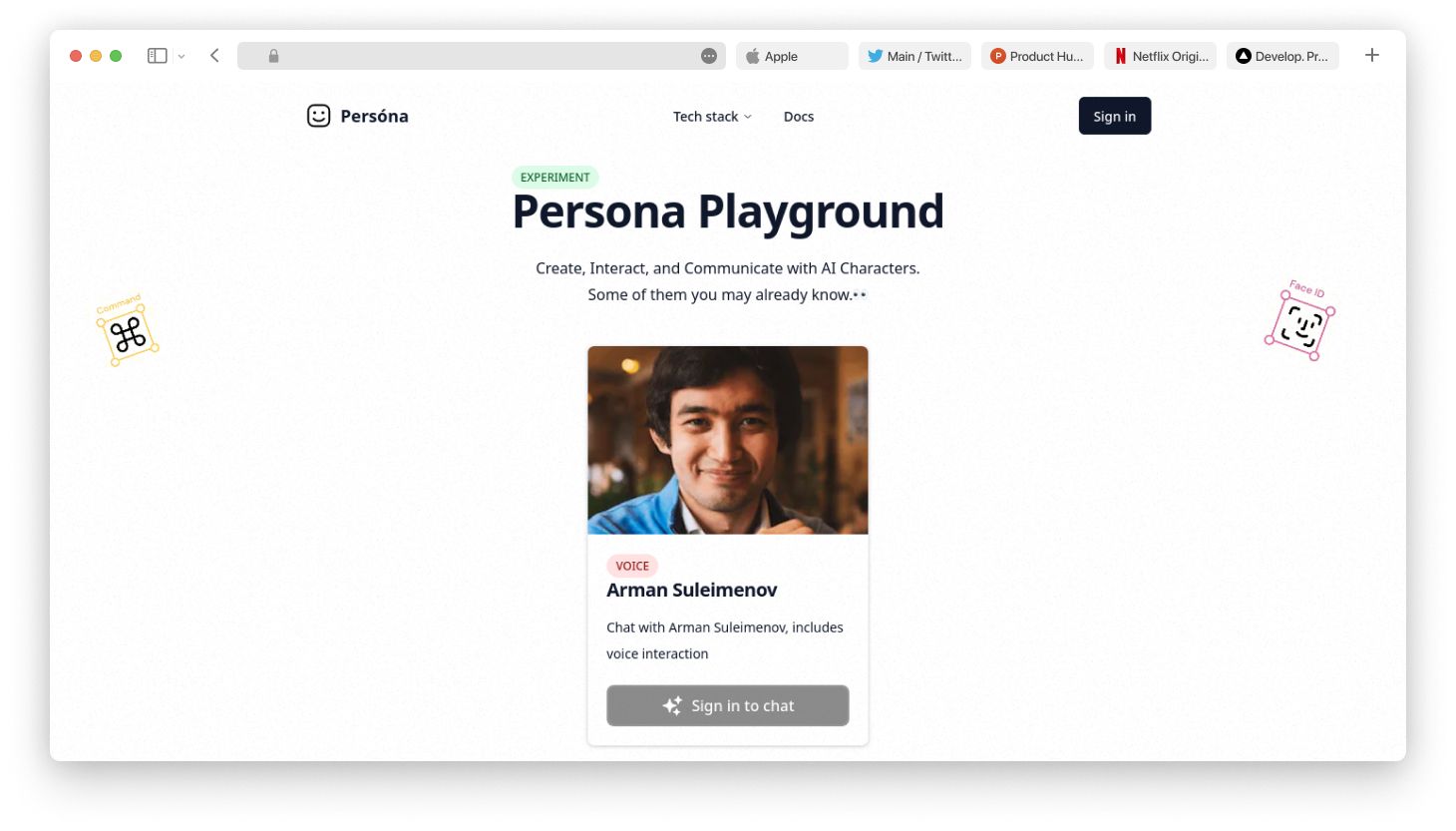

Project: Persóna

Website: persona.bkh.dev

GitHub: khajimatov/persona-ai

Persóna was created during the nFactorial AI Cup, a generative AI hackathon for developers that took place from April 7 to 9, 2023. I participated in the App track, where the goal was to build an app in 48 hours using any available generative AI.

My idea was to create a place where users could interact with a virtual version of nFactorial founder Arman Suleimenov, combining voice cloning and knowledge embedding. Users would have a conversation with virtual Arman, who would answer questions and share insights based on his knowledge and experience. The app would use ElevenLabs to clone Arman’s voice and LangChain to generate responses based on his past interviews and speeches.

To see the end result, click the link at the top.

But first, let me tell you a bit about how I developed this project, what I managed to deliver from the original plan in those 48 hours, what I didn’t ;(, and what I learned from the experience.

Development process

⏱️ So the 48-hour countdown has begun.

The plan for creating Persóna was as follows:

- Create a web app (home page, chat UI, etc.)

- Clone the voice using ElevenLabs.

- Explore solutions for creating embeddings for the LLM.

- Combine all of this into one app.
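The “embeddings for the LLM” step usually means retrieval: split the transcripts into chunks, embed each chunk once, and at question time rank the chunks by how close their vectors are to the question’s vector, then hand the best ones to the LLM as context. LangChain wraps this in its vector-store and retriever classes; the hand-rolled sketch below does not use LangChain and shows only the ranking step, using cosine similarity. `Chunk` and `topKChunks` are hypothetical names, and the 3-dimensional vectors are made-up stand-ins for real embedding-model output.

```typescript
// A transcript chunk paired with its precomputed embedding vector.
// Hypothetical shape: real vectors would come from an embedding model.
interface Chunk {
  text: string;
  embedding: number[];
}

// Cosine similarity: dot(a, b) / (|a| * |b|), in the range [-1, 1].
function cosineSimilarity(a: number[], b: number[]): number {
  if (a.length !== b.length) {
    throw new Error("Vectors must have the same dimension");
  }
  let dot = 0;
  let normA = 0;
  let normB = 0;
  a.forEach((x, i) => {
    const y = b[i] ?? 0; // lengths match, so this is always defined
    dot += x * y;
    normA += x * x;
    normB += y * y;
  });
  if (normA === 0 || normB === 0) return 0; // avoid dividing by zero
  return dot / (Math.sqrt(normA) * Math.sqrt(normB));
}

// Return the k chunks most similar to the query embedding, best first.
function topKChunks(query: number[], chunks: Chunk[], k: number): Chunk[] {
  return chunks
    .map((chunk) => ({ chunk, score: cosineSimilarity(query, chunk.embedding) }))
    .sort((x, y) => y.score - x.score)
    .slice(0, k)
    .map((entry) => entry.chunk);
}

// Toy data: placeholder chunks with made-up 3-dimensional vectors.
const chunks: Chunk[] = [
  { text: "chunk A", embedding: [0.9, 0.1, 0.0] },
  { text: "chunk B", embedding: [0.1, 0.9, 0.1] },
  { text: "chunk C", embedding: [0.2, 0.3, 0.9] },
];

const question = [0.8, 0.2, 0.1];
const context = topKChunks(question, chunks, 2).map((c) => c.text);
console.log(context); // logs the texts of chunk A and chunk C
```

The selected chunk texts are what would then be pasted into the LLM prompt as context.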

With the plan in place, I started on the web app and chat UI, thinking about the best ways for a user to interact with the app. But first: which technologies should I use?

While scrolling through Twitter, YouTube and Hacker News, I keep a list of interesting technologies I would like to learn or use in the future, and for this project I decided to put them to use.

So, the tech stack is the following:

- Next.js (pages)

- TypeScript

- Tailwind

- Prisma (ORM)

- tRPC (nice DX for APIs, client/server logic)

- Vercel

- PlanetScale (database)

I also wanted to add authentication, so I used Clerk for it:

- Clerk (authentication, login)

And I used OpenAI’s GPT-3.5-Turbo model as the LLM for chatting:

- GPT-3.5-Turbo
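As a rough illustration of where GPT-3.5-Turbo fits in: OpenAI’s Chat Completions API takes a list of role-tagged messages, so a `system` message can set up the persona (plus any retrieved transcript snippets), followed by the chat history and the new user message. The sketch below only builds the JSON body; `buildChatRequest`, the prompt wording and the `temperature` value are assumptions, not Persóna’s actual code. Sending it is a `POST` to `https://api.openai.com/v1/chat/completions` with an `Authorization: Bearer <API key>` header.

```typescript
// Roles accepted by the Chat Completions API.
type Role = "system" | "user" | "assistant";

interface ChatMessage {
  role: Role;
  content: string;
}

interface ChatRequest {
  model: string;
  messages: ChatMessage[];
  temperature: number;
}

// Hypothetical helper: puts persona instructions and retrieved context in a
// system message, then appends the conversation so far and the new question.
function buildChatRequest(
  history: ChatMessage[],
  userInput: string,
  context: string[],
): ChatRequest {
  const systemPrompt =
    "You are a virtual version of Arman Suleimenov, founder of nFactorial. " +
    "Answer in his voice, using the context below when it is relevant.\n\n" +
    context.map((c, i) => `[${i + 1}] ${c}`).join("\n");

  return {
    model: "gpt-3.5-turbo",
    messages: [
      { role: "system", content: systemPrompt },
      ...history,
      { role: "user", content: userInput },
    ],
    temperature: 0.7, // illustrative value, not taken from Persóna
  };
}

const request = buildChatRequest(
  [],
  "How did you start nFactorial?",
  ["chunk A", "chunk C"],
);
console.log(JSON.stringify(request, null, 2));
```

Keeping the history in the request is what lets the model answer follow-up questions; the API itself is stateless between calls.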

To kickstart the project, I used create-t3-app (https://create.t3.gg) as a template; it comes preconfigured with tRPC, Prisma, Tailwind, TypeScript and Next.js. I used shadcn-ui (https://ui.shadcn.com) as my design system/UI library.

I wanted the chat interface to look and feel like a native app. To make it pleasant to use on mobile, I used the newer CSS viewport units (svh, dvh, lvh). Messages animate in with transitions when they are sent and received, and to make the interaction a little more fun, a “tick” sound plays whenever the user sends or receives a message. The view also scrolls on each new message, so the user always sees the latest messages. That was my plan for the chat UI.
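The scroll-and-tick behaviour can be sketched as two small helpers. Setting an element’s `scrollTop` to its `scrollHeight` scrolls it to the bottom (the browser clamps the value), and rewinding an audio element before calling `play()` lets the tick restart on rapid messages. The helper names and the structural `Scrollable`/`Playable` types are hypothetical; they match the relevant properties of `HTMLElement` and `HTMLAudioElement`, so the same functions work on real DOM nodes and on plain objects in tests.

```typescript
// Minimal structural types: satisfied by HTMLElement / HTMLAudioElement,
// and by plain objects in tests.
interface Scrollable {
  scrollTop: number;
  scrollHeight: number;
}

interface Playable {
  currentTime: number;
  play(): Promise<void>;
}

// Scroll the chat container to the bottom so the newest message is visible.
function scrollToLatest(container: Scrollable): void {
  container.scrollTop = container.scrollHeight;
}

// Rewind and play the "tick" sound, so quick successive messages
// restart it instead of waiting for the previous tick to finish.
async function playTick(audio: Playable): Promise<void> {
  audio.currentTime = 0;
  try {
    await audio.play();
  } catch {
    // Browsers may block playback until the user has interacted with the page.
  }
}

// Hypothetical hook point: call whenever a message is sent or received.
async function onNewMessage(container: Scrollable, tick: Playable): Promise<void> {
  scrollToLatest(container);
  await playTick(tick);
}
```

In a React component, `onNewMessage` would typically be called from a `useEffect` that depends on the message list, with the container and audio element taken from `useRef`s.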

Now let’s talk about the voice cloning part.

I found out about ElevenLabs on Twitter and decided to use it to clone Arman’s voice. Unfortunately, I couldn’t find any of Arman’s speeches in English to use for cloning (voice cloning worked well only in English). But when I approached Arman and told him about it, it turned out that he had some recorded videos of his past podcasts and talks in English, and he shared them with me (so I could also use them as embeddings for the LLM).

I cut out the relevant parts of the videos to clone the voice and got impressive results on the first try. The cloned voice was so good that I even considered calling it a day: integrating the voice into the app and skipping the embedding, since I thought this alone might be enough to win the hackathon. But I decided there was plenty of time left to finish everything I had planned.
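Once a voice is cloned, speech is generated through ElevenLabs’ text-to-speech endpoint, `POST https://api.elevenlabs.io/v1/text-to-speech/{voice_id}`, authenticated with an `xi-api-key` header. The sketch below builds that request as plain data so it can be checked without a network call; `buildTtsRequest` is a hypothetical helper, the voice ID and key are placeholders, and the `model_id` and `voice_settings` values are illustrative rather than what Persóna used.

```typescript
// A request described as plain data, ready to hand to fetch().
interface TtsRequest {
  url: string;
  method: "POST";
  headers: Record<string, string>;
  body: string;
}

// Hypothetical helper: builds an ElevenLabs text-to-speech request for a
// cloned voice. voiceId and apiKey are placeholders supplied by the caller.
function buildTtsRequest(voiceId: string, apiKey: string, text: string): TtsRequest {
  return {
    url: `https://api.elevenlabs.io/v1/text-to-speech/${encodeURIComponent(voiceId)}`,
    method: "POST",
    headers: {
      "xi-api-key": apiKey,
      "Content-Type": "application/json",
      Accept: "audio/mpeg", // the response body is the generated audio
    },
    body: JSON.stringify({
      text,
      model_id: "eleven_monolingual_v1", // illustrative English model choice
      voice_settings: { stability: 0.5, similarity_boost: 0.75 }, // illustrative values
    }),
  };
}

const req = buildTtsRequest("YOUR_VOICE_ID", "YOUR_API_KEY", "Hi, I'm Arman!");
console.log(req.url);
```

In the browser, `fetch(req.url, req)` would return the audio bytes, which can be wrapped in a `Blob` and played through an `<audio>` element. The API key should stay on the server (for example behind a tRPC procedure) rather than ship to the client.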

To be continued…